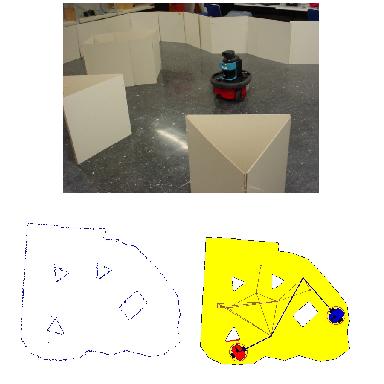

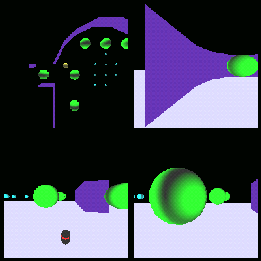

| Next-best-view simulation: the robot trajectory and sensing locations were computed using genetic algorithms | Multi-robot map-building simulation: omnidirectional field of view and unlimited range | Multi-robot map-building simulation: 180° field of view and limited range |
| --- | --- | --- |

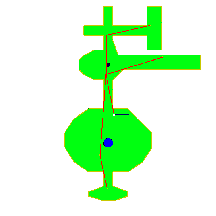

| Model matching: real laser data | Laser data at times T and T+1 | Data matching using the partial Hausdorff distance |
| --- | --- | --- |
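
For readers unfamiliar with the partial Hausdorff distance named in the last caption, the following is a minimal sketch of the standard definition, not the implementation used to produce the figure. For each point of one scan it takes the distance to the nearest point of the other scan, then returns the K-th smallest of those values instead of the maximum, so a few outliers or non-overlapping readings do not dominate the score. The function names, the `fraction` parameter (which sets K as a share of the points), and the toy scans are all assumptions made for illustration.

```python
import math


def directed_partial_hausdorff(a, b, fraction=0.75):
    """K-th smallest nearest-neighbour distance from the points of a to the set b.

    `fraction` is a hypothetical parameter: K = ceil(fraction * len(a)).
    With fraction = 1.0 this is the classical directed Hausdorff distance.
    """
    if not a or not b:
        raise ValueError("both point sets must be non-empty")
    if not 0.0 < fraction <= 1.0:
        raise ValueError("fraction must be in (0, 1]")
    # Distance from each point of a to its closest point in b, in ascending order.
    nearest = sorted(min(math.dist(p, q) for q in b) for p in a)
    # Rank K (1-based), never below 1.
    k = max(1, math.ceil(fraction * len(nearest)))
    return nearest[k - 1]


def partial_hausdorff(a, b, fraction=0.75):
    """Symmetric version: the larger of the two directed distances."""
    return max(
        directed_partial_hausdorff(a, b, fraction),
        directed_partial_hausdorff(b, a, fraction),
    )


# Toy 2D "scans" (made-up data): the second is the first shifted by 1 in y,
# and the first contains one spurious reading at (10, 10).
scan_t = [(0, 0), (1, 0), (2, 0), (10, 10)]
scan_t1 = [(0, 1), (1, 1), (2, 1)]

robust = partial_hausdorff(scan_t, scan_t1, fraction=0.75)   # ignores the outlier
classic = partial_hausdorff(scan_t, scan_t1, fraction=1.0)   # dominated by the outlier
```

In this toy case the partial distance recovers the 1-unit shift, while the classical distance is set entirely by the spurious point. The brute-force nearest-neighbour search is quadratic in the number of points; a spatial index would be the usual choice for full laser scans.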